Diverting Hate: DHS-Funded Censorship Campaign Targets the Manosphere

Censorship, AI surveillance, and more: A full breakdown of the March 2024 Diverting Hate report.

First of all, THANK YOU for the support! I frequently ask myself why I do this kind of independent research, despite the great effort I spend on it and the very real personal risks involved. Every time I ask myself this, I come to the same answer: I do it because it matters, and I will continue to do so. Nevertheless, support from readers like you helps me keep the lights on, so sincerely, thank you.

With some effort, I was able to locate a Google Drive containing the Diverting Hate report from March 2024. I will update this post to include a new link should they choose to remove access rights from the public one (again). In the meantime, I recommend you download the report for yourself using their link:

(EDIT - Within 30 minutes of my reporting, Diverting Hate restricted access to their report. I’ve uploaded it to my own Google Drive here: https://drive.google.com/file/d/1-i_NeesyWl_p7blfVLAYBdhkn05bjCHQ/view)

Introduction

The intro to the Diverting Hate March 2024 report is the same as those that came before it and contains their mission statement. Notably, they removed the images of Rollo Tomassi and Shadaya Knight from the pictogram, replacing them with a picture of Andrew Tate and a tweet from @IncelsCo (the Twitter page belonging to Incels.is owner Lamarcus Small).

Diverting Hate bemoans the Constitutional limitations of government censorship and the social media engagement models that they claim allow problematic influencers to grow. They advocate, in turn, for a “tech-based solution to address this fundamentally technological issue.”

Diverting Hate modeled their program on Google’s Redirect Method. The Redirect Method was a joint effort between Jigsaw, a unit within Google that explores “threats to open societies” (including disinformation), and an NGO called Moonshot CVE. The method was pioneered in 2016 as a tool to combat ISIS extremism but has since been vastly expanded to encompass disinformation, conspiracy theories, and various other “online harms” that align with the scope of the US counterterrorism program. The Diverting Hate team relied on previous uses of the Redirect Method and on Moonshot’s databases of extremist-related indicators in their program.

There are four strategies outlined in the report:

Placing targeted ads against the creators in question, which they hope will lead audiences away from the wrong-think influencers and towards “positive male influences”. These government-approved creators and podcasts include Evryman, MenAlive, Visible Man, and the Man Enough Podcast. Most of these links lead to for-profit sites where the right-think creators sell books, self-help courses, and expensive wellness retreats for men. So, the goal of the program is to find ways to divert audiences away from wrong-think and toward right-think, as defined by the US Government.

Collaboration with other NGOs and use of AI surveillance tech to monitor targeted groups, identify new “hate speech” keywords and trends, and target at-risk individuals for diversion measures.

Measuring and monitoring the response to diversion campaigns to continuously refine and enhance them.

“Encouraging” social media platforms to embrace the tools and recommendations of the program and use them to drive new content moderation policies.

Case Study: The “Male Supremacy Scale”

Included in the March 2024 report is a formal analysis of the success and viability of the diversion campaign planned against Manosphere creators on YouTube. Diverting Hate teamed up with PERIL at American University to execute this case study. (Read more about PERIL in a previous Substack post, “THERE ARE PLANTS - Part 1”.)

The Male Supremacy Scale, created by a PERIL researcher, is a 15-item scale to “measure” Male Supremacy, which was implemented in the Diverting Hate program to test the efficacy of diversion campaigns. The report confirms that 11 large Manosphere influencers were targeted by diversion campaigns on YouTube. In the study, the team analyzed content and assigned it a quantitative extremism score according to the Male Supremacy Scale. The YouTube channels scored included Rollo Tomassi’s The Rational Male, Fresh & Fit, Pearl Davis, Aaron Clarey, Rich Cooper, and more.

Diverting Hate notes that they were unable to conduct analysis on some of these creators - Fresh & Fit, Pearl Davis, Sandman, and Legion of Men - due to YouTube’s demonetization of their channels. Later in the report, they lament that demonetization isn’t enough to suppress the creators and advocate for more proactive censorship measures.

The diversion campaigns against these creators were designed to insert targeted ads for two of the program partners - Man Enough Podcast (hosted by actor Justin Baldoni) and Man Therapy - into their YouTube videos. They ran the campaign starting in February 2024 and then measured the view-through rate - “measured by how much of the audience exposed to the ad placement watches the ad beyond the five-second ‘skip ad’ prompt.”
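To make that metric concrete, here is a minimal sketch of how a view-through rate of this kind could be computed. The function name and the numbers are hypothetical and used only for illustration; they are not figures from the report, and the report does not spell out its exact calculation.

```python
def view_through_rate(impressions: int, views_past_skip: int) -> float:
    """Share of ad impressions where the viewer kept watching past the skip prompt.

    Both arguments are hypothetical inputs: `impressions` is how many times the
    ad was shown, `views_past_skip` is how many of those viewers kept watching
    after the five-second "skip ad" prompt appeared.
    """
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    if not 0 <= views_past_skip <= impressions:
        raise ValueError("views_past_skip must be between 0 and impressions")
    return views_past_skip / impressions


# Illustrative numbers only (not from the report).
rate = view_through_rate(impressions=1000, views_past_skip=250)
print(f"View-through rate: {rate:.1%}")
```

A higher rate would mean more of the targeted audience chose not to skip the ad, which appears to be how the program judges whether a diversion ad is "working."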

To reiterate, this program, funded by the US Department of Homeland Security, placed ads featuring competitor influencers (program partners) onto YouTube videos of targeted Manosphere creators. That means when you click on one of Rollo Tomassi’s YouTube videos, you might see a state-sponsored advertisement for his competitor, Justin Baldoni.

https://www.youtube.com/c/WeAreManEnough

The Diverting Hate Database

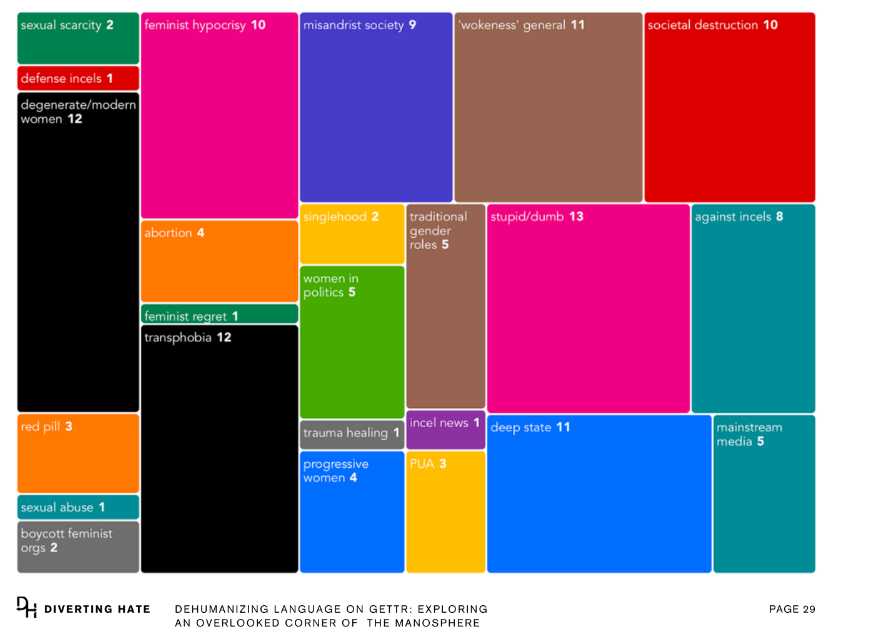

The Diverting Hate report describes a preliminary study conducted on the social media platform Gettr. In the study, DH scraped the platform for misogynistic keywords selected from their database and then used the ATLAS AI system to perform analysis on the data. The stated goal of this study is to “inform policymakers, law enforcement, and other key stakeholders about what extreme misogyny looks like in different communities and on various social media platforms without proper content moderation or enforcement of existing policies.”

In other words, DH intends to use AI to build a massive database of hate speech, then use that data to lobby lawmakers and coerce big tech platforms to structure policy changes around it.

Among the terms considered hate speech are “wahmen”, “Femoid”, “incel”, and “TopG”.

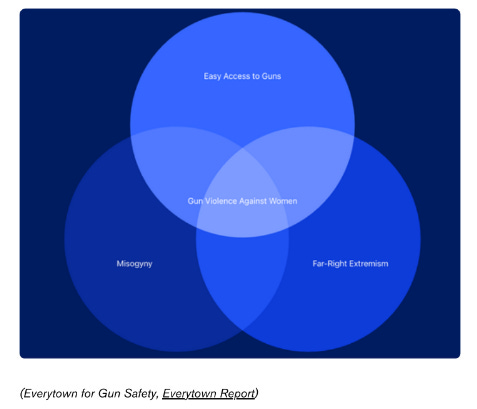

Gun Violence and Misogyny

Section 2 of the March 2024 Diverting Hate report contains an extremely concerning analysis which conflates gun violence with Manosphere and “far-right extremism” hate speech and references a report from Everytown for Gun Safety, an incredibly left-of-center gun control group. DH states, “The report also underscores the importance of gun safety measures and the role of law enforcement in preventing individuals with extremist views from posing threats to public safety.” Is DH advocating the use of social media surveillance to prevent Americans who follow Rollo Tomassi and Andrew Tate from buying guns? Yikes.

Unsurprisingly, DH implies that Manosphere creators are indirectly responsible for mass shootings and targeted attacks against women and marginalized communities while ignoring the WAY more obvious role of accelerationism and foreign terrorist groups.

DH concludes:

“From enhanced monitoring of online platforms to comprehensive gun safety measures and addressing the root causes of gender-based hatred, concerted efforts are required to stem the tide of violence fueled by misogyny and extremism.”

The Big Picture: Coercing Lawmakers and Social Media Platforms to Censor Free Speech for the “Greater Good”

Section 3 of the March 2024 Diverting Hate report places much of the blame for the proliferation of Manosphere content onto social media platforms and then advocates for moderation reform. They claim that X, YouTube, TikTok, Facebook, and Instagram have become hotbeds for manosphere content, and that their existing content moderation policies are not good enough.

DH lambasts X owner Elon Musk for allowing previously banned accounts such as Alex Jones and Donald Trump to return to the platform. They also claim that by monetizing manosphere creators, X isn’t sufficiently enforcing the TOS around their new monetization policy. Paradoxically, they follow this criticism by complaining that demonetization of manosphere accounts doesn’t stop them from growing, and that large accounts can actually exploit their demonetization to cast themselves as victims and grow even bigger. Their solution? Demonetizing wrong-think creators when they are too small to fight back.

“While a powerful tool in the arsenal of content moderation and mitigation, demonetization should be implemented earlier in the lifespan of hateful accounts to ensure they do not have a big enough audience to be significantly supported and considered ‘martyrs’ in the community.”

DH also cites a Media Matters study highlighting the use of “fan accounts” that boost clips from banned and demonetized influencers and claims such accounts worsen the problem.

The report ends with a list of recommendations. This is where we get a glimpse of the true goal of the program: the adoption of overreaching surveillance tools and expanded moderation policies to censor and suppress legal speech, all under the guise of terrorism prevention.

Here are the recommendations:

Yes, the overall goal of this program is to coerce social media platforms to go to great lengths, including the use of AI facial recognition, to moderate hate speech.

Diverting Hate also includes recommendations for maintaining program OPSEC, such as limiting the research that is made available to the public. Nothing in these reports should be immune from public scrutiny. The inclusion of this note reinforces my stance that the targeted influencers should file a Freedom of Information Act request with DHS regarding the TVTP program.

Again, I do not endorse any of the individuals or belief systems described in this article. In fact, I generally can’t stand the vast majority of the Manosphere, and think that it’s overall kind of goofy. But it doesn’t matter, because the end goal of these programs has nothing to do with helping women or other marginalized groups. The end goal is expanding the definitions of terrorism and extremism to include large, non-violent online communities, and to use this expanded definition to justify expansive government overreach and the advancement/normalization of AI surveillance. The potential for abuse here is enormous and should be taken very seriously by anyone who is serious about their Constitutional rights.
